Systematic reviews and meta-analyses identify, appraise and synthesise all evidence on a specific research question. They are considered the highest level of evidence, help physicians stay up to date and enable them to make informed clinical decisions1. It is therefore not surprising that this study design has become increasingly popular2,3.

Inevitably, the phenomenon of duplicate meta-analyses is also increasingly common. A recent study showed that more than half of meta-analyses have at least one overlapping meta-analysis, and some topics had up to 13 overlapping meta-analyses2. While some degree of duplication is warranted in research, large numbers of overlapping meta-analyses seem unnecessary and could reflect wasted efforts and inefficiency in the process of summarising evidence2. In addition, the interpretation of evidence becomes confusing if the conclusions of duplicate meta-analyses are discordant.

In this paper, we review the current practice of meta-analyses in cardiovascular medicine and the implications of overlapping meta-analyses, and provide recommendations on the interpretation and prioritisation of (duplicate) meta-analyses.

The increasing popularity of meta-analyses

The increasing popularity of meta-analyses is illustrated in Figure 1. A PubMed search showed that the number of meta-analyses in the cardiovascular field increased by almost 1,800% between 1993 and 2012, whereas the number of randomised controlled trials (RCTs) increased by only 140% in the same period. In 1993, on average 28 RCTs were published for every meta-analysis, whereas by 2012 this RCT:meta-analysis ratio had fallen to 2.7:1. This trend is an indication of the relative growth of meta-analyses as compared with other published research and was seen both in the cardiovascular discipline (Figure 1A) and in other medical disciplines (Figure 1B). Between 1993 and 2013, on average 18% of all meta-analyses concerned a cardiovascular topic. This proportion has remained stable over time.

Figure 1. Number of annually published meta-analyses and RCTs in (A) the cardiovascular field and (B) all disciplines. The red and blue bars represent the annually published RCTs and meta-analyses, respectively. The green line represents the number of published meta-analyses compared with the number of published RCTs in each year. It is an indication of the relative growth of meta-analyses as compared with the overall growth of published research in the cardiovascular field. Data are based on the following PubMed searches: A) (randomi* OR meta-analysis [ptyp]) (“Cardiovascular Diseases”[Mesh]); B) (randomi* OR meta-analysis [ptyp]). N: number; RCT: randomised controlled trial
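The yearly counts behind a figure of this kind could, in principle, be retrieved programmatically. The following is a minimal Python sketch, assuming the NCBI E-utilities ESearch endpoint. Splitting the caption's search into a separate meta-analysis term and RCT term, and combining them with the MeSH filter via AND, are assumptions made for illustration; the helper names (pubmed_count_url, fetch_count, rct_per_meta_analysis) are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

# Search terms adapted from the figure caption (the split is an assumption).
META_TERM = "meta-analysis[ptyp]"
RCT_TERM = "randomi*"
CARDIO_FILTER = '"Cardiovascular Diseases"[Mesh]'


def pubmed_count_url(term: str, year: int) -> str:
    """Build an ESearch URL that asks only for the hit count of one publication year."""
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",   # restrict by publication date
        "mindate": str(year),
        "maxdate": str(year),
        "rettype": "count",
        "retmode": "json",
    }
    return f"{ESEARCH}?{urlencode(params)}"


def fetch_count(term: str, year: int) -> int:
    """Query PubMed (network access required) and return the number of hits."""
    with urlopen(pubmed_count_url(term, year)) as response:
        return int(json.load(response)["esearchresult"]["count"])


def rct_per_meta_analysis(n_rct: int, n_meta: int) -> float:
    """Number of RCTs published for every meta-analysis in the same year."""
    return n_rct / n_meta
```

A cardiovascular count for one year would then be requested with, for example, fetch_count(f"{META_TERM} AND {CARDIO_FILTER}", 2012). Counts obtained today will not necessarily match those reported here, because PubMed indexing changes over time.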

This increasing popularity has led to duplicate meta-analyses on the same topic4. A recent study investigated overlapping meta-analyses on the same topic by assessing a randomly selected 5% of all published meta-analyses in 2010. The authors found that 67% of all meta-analyses had at least one overlapping meta-analysis that did not represent an update, and 5% of the research questions were investigated in at least eight overlapping meta-analyses2. Replication of research generally leads to more knowledge and confidence in the conclusions, but could also represent wasted time and effort. Some authors suggest that four or more meta-analyses on the same topic with similar eligibility criteria and outcomes are too many, but there is no agreed threshold for an acceptable amount of duplication4.

Examples of overlapping meta-analyses

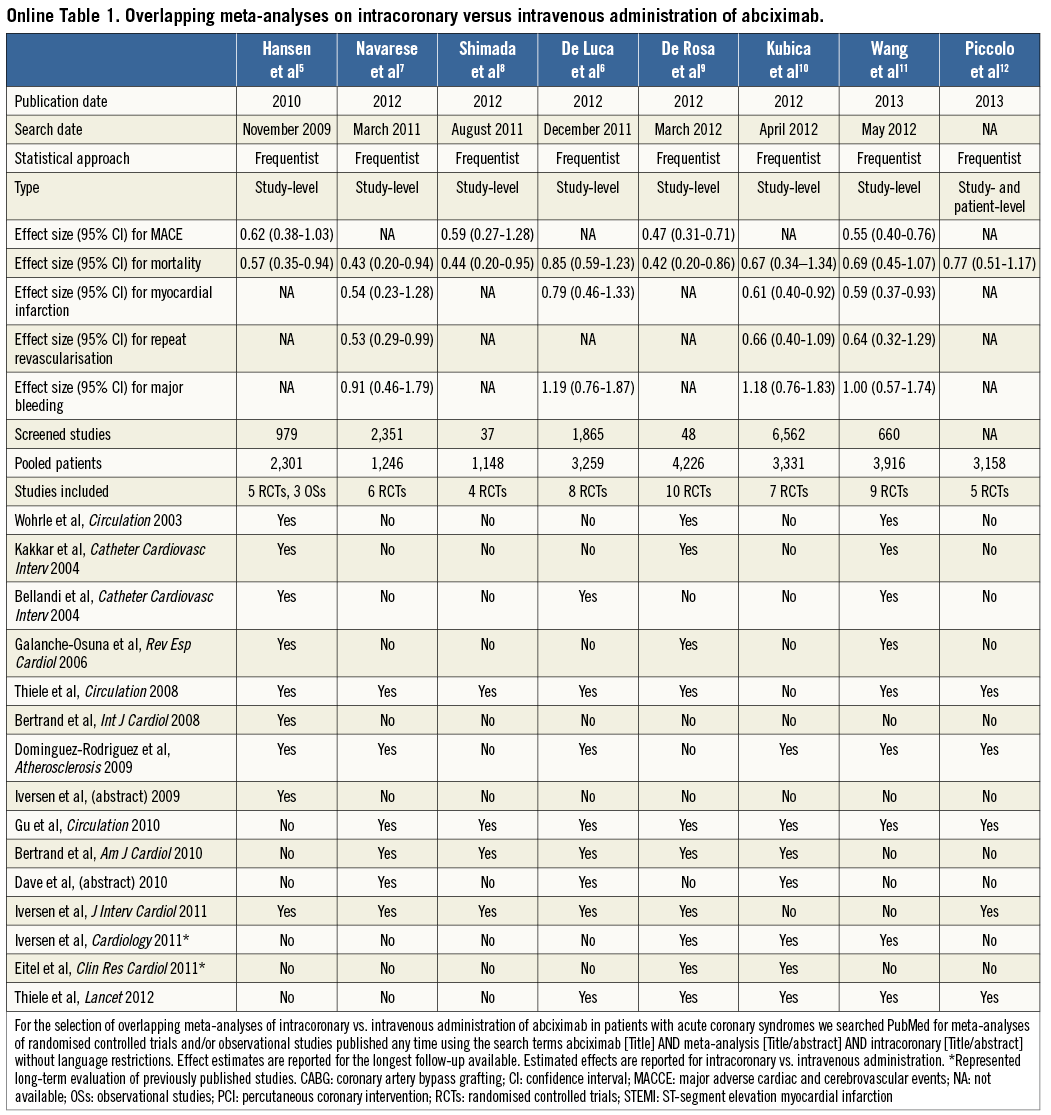

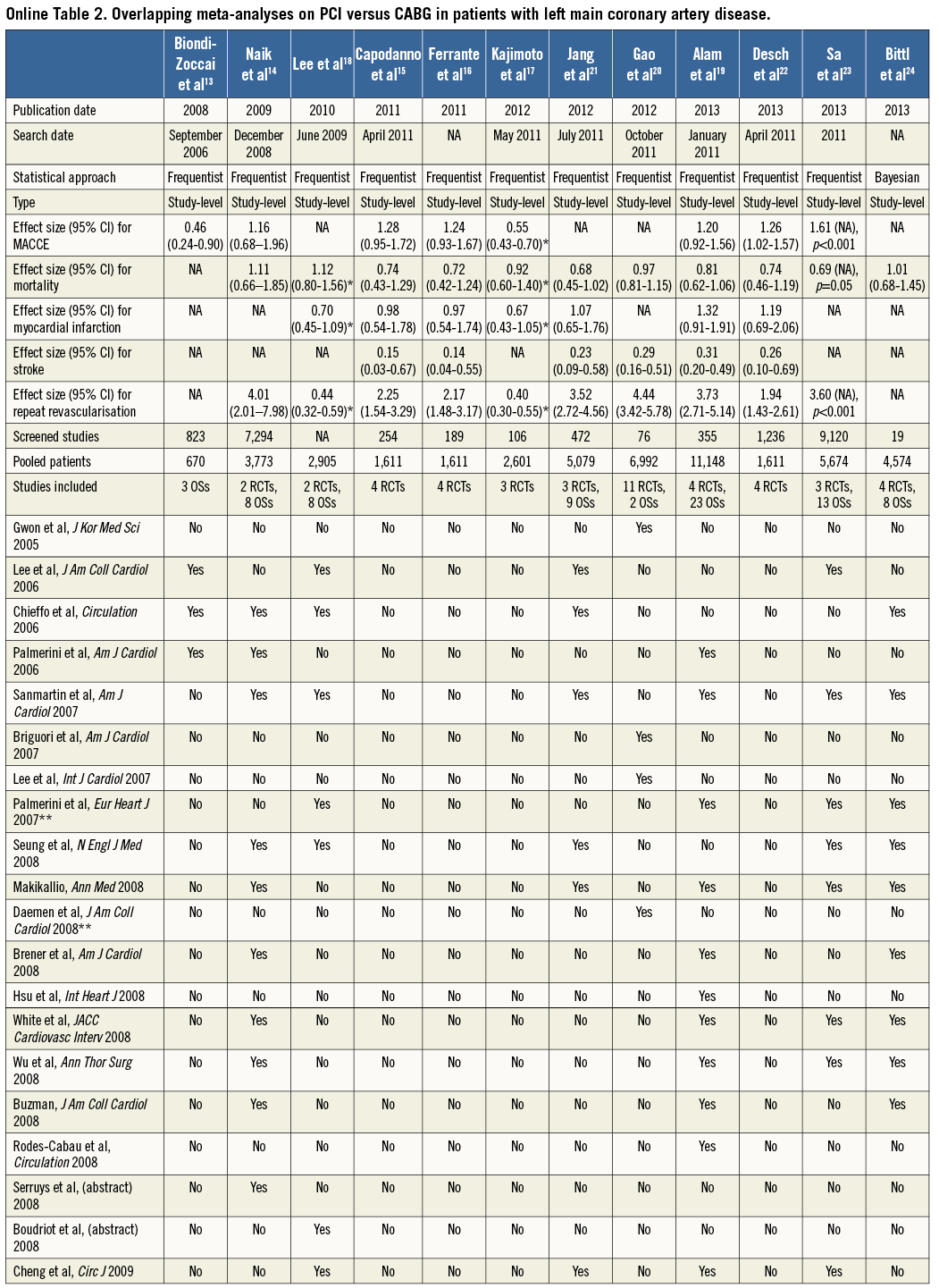

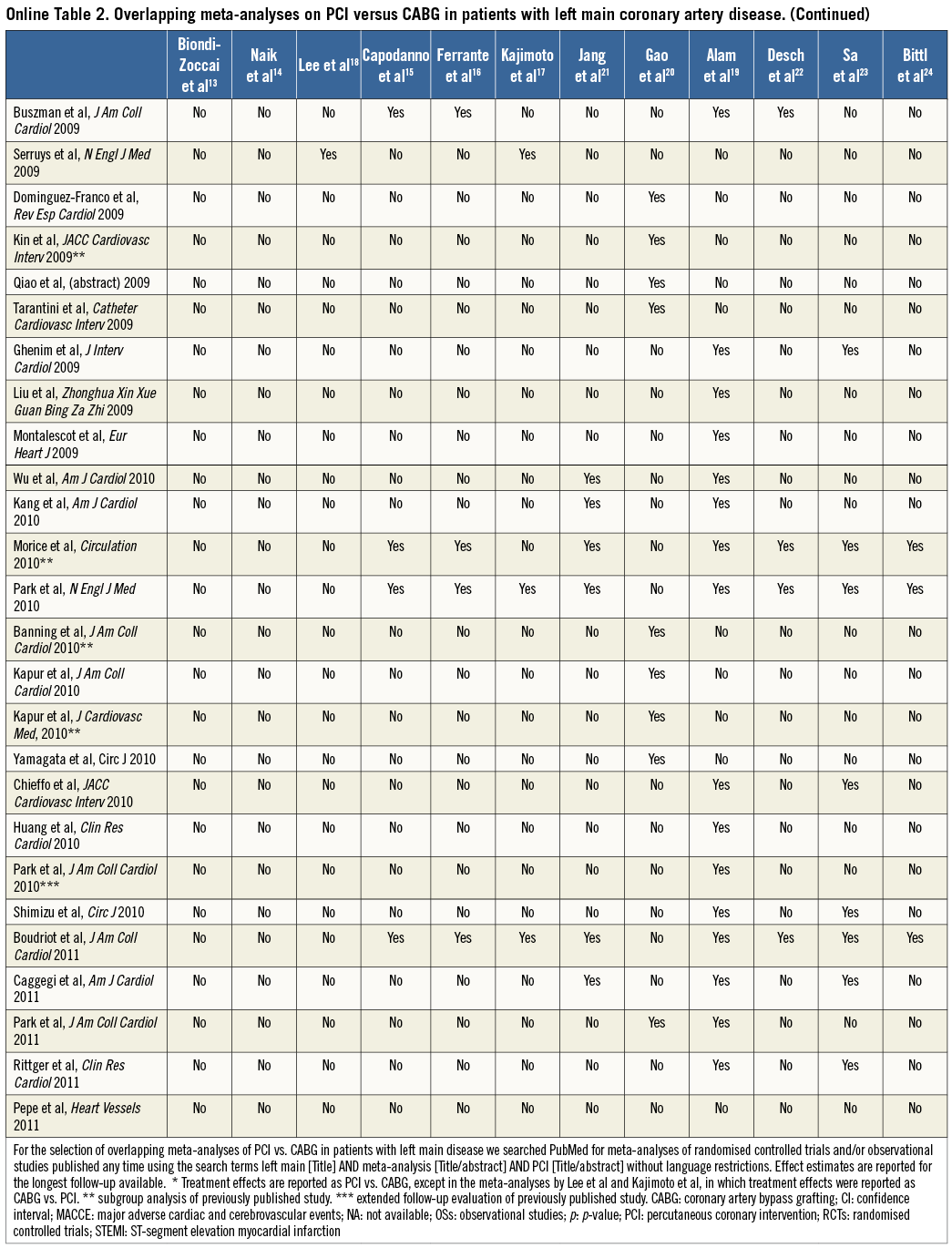

Two case examples of overlapping meta-analyses in the cardiovascular field are illustrated in Table 1 (with Online Table 1) and Table 2 (with Online Table 2), respectively. For each meta-analysis, we extracted information on the year of publication, search date, treatment effect for outcomes of interest, number of studies screened and selected, and patient population. We also noted first author, journal and year of publication of the studies included and combined in each meta-analysis.

Through a PubMed search, seven overlapping meta-analyses of intracoronary versus intravenous administration of abciximab in patients with acute coronary syndromes were identified5-11. An additional meta-analysis with patient-level data on the same topic is published in this issue of the Journal12. These eight meta-analyses were published between 2010 and 2013 (88% in 2012-2013), and the number of primary studies included ranged between four and ten (Table 1). Seven meta-analyses included only RCTs, and one meta-analysis comprised both RCTs and observational studies (OSs). The search dates ranged from November 2009 to May 2012, which was reflected in the number of screened studies (from 37 to 6,562). The treatment effect for mortality was reported in all meta-analyses but was of inconsistent statistical significance: four (50%) meta-analyses found a statistically significant benefit of intracoronary abciximab administration, whereas four (50%) did not. Similarly, the risk of major adverse cardiac events (MACE) was significantly reduced in only two of four (50%) meta-analyses reporting on this outcome, the risk of myocardial infarction in two of four (50%), and the risk of repeat revascularisation in one of three (33%). Of four meta-analyses that sought to assess the risk of bleeding, none (0%) found a significant difference between intracoronary and intravenous administration.

Another PubMed search identified twelve meta-analyses of percutaneous coronary intervention (PCI) versus coronary artery bypass grafting (CABG) in patients with left main coronary artery disease. These meta-analyses were published between 2008 and 2013 (58% in 2012-2013) and the number of primary studies included ranged between 3 and 27 (Table 2)13-24. Four meta-analyses included only RCTs, one meta-analysis comprised only OSs and seven meta-analyses included both RCTs and OSs. The authors’ search dates varied from September 2006 to April 2011, and the number of screened studies ranged between 12 and 9,120. Mortality was reported in eleven meta-analyses, all of which found no statistically significant benefit of either treatment. Major adverse cardiac and cerebrovascular events (MACCE) were reported in eight meta-analyses, of which three (38%), one (13%) and four (50%) found a higher, lower or similar risk, respectively, for this composite endpoint after PCI versus CABG. All meta-analyses that reported an effect size for myocardial infarction (n=7) found no statistically significant difference between treatments. Also, all ten meta-analyses that investigated repeat revascularisation found significantly higher rates after PCI than after CABG. On the other hand, the risk of stroke was significantly higher with CABG in all six meta-analyses reporting this outcome.

Taken together, these findings indicate that meta-analyses on the optimal administration route for abciximab and the optimal treatment strategy for left main revascularisation published in the last five years differed not only in the magnitude of the treatment effect for some outcomes, but also occasionally in the direction of the effect (e.g., MACCE in the left main meta-analyses). In the illustrative examples above, these differences might be attributed to varying eligibility criteria regarding inclusion of OSs, the target population analysed (e.g., acute coronary syndromes or ST-segment elevation myocardial infarction in the abciximab route meta-analyses; patients with diabetes mellitus or acute coronary syndromes in the left main revascularisation meta-analyses), and the non-consideration of studies published after the search date of each meta-analysis. In contrast, while more recent meta-analyses might have included newly published studies, their incremental value remains uncertain (e.g., similar results were noted in all meta-analyses of left main revascularisation with regard to all the components of MACCE). Interestingly, three meta-analyses of left main revascularisation included exactly the same four RCTs but derived slightly different summary effects, underscoring the potential for differences introduced at the stage of data synthesis15,16,22.
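How the same set of trials can yield different summary effects at the stage of data synthesis can be sketched in a few lines. The Python example below is illustrative only: it assumes study-level effects on a log scale with known variances, and contrasts an inverse-variance fixed-effect model with a DerSimonian-Laird random-effects model. The function names and the four trial values are hypothetical and are not taken from the meta-analyses discussed above.

```python
import math


def pool_fixed(effects, variances):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)


def tau_squared_dl(effects, variances):
    """DerSimonian-Laird moment estimate of the between-study variance."""
    weights = [1.0 / v for v in variances]
    fixed, _ = pool_fixed(effects, variances)
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)


def pool_random(effects, variances):
    """Random-effects pooled estimate: each variance is inflated by tau squared."""
    tau2 = tau_squared_dl(effects, variances)
    return pool_fixed(effects, [v + tau2 for v in variances])


# Four hypothetical trials: log odds ratios and their variances.
log_or = [-0.60, -0.10, 0.05, -0.45]
var = [0.04, 0.02, 0.09, 0.06]

fixed_est, fixed_se = pool_fixed(log_or, var)
random_est, random_se = pool_random(log_or, var)
print("fixed-effect odds ratio:  ", round(math.exp(fixed_est), 3))
print("random-effects odds ratio:", round(math.exp(random_est), 3))
```

The two models weight the same four trials differently, so the pooled odds ratios and their standard errors need not coincide. Choices of effect measure, continuity corrections and follow-up time point can introduce further differences that this sketch does not cover.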

What to do when meta-analyses overlap

Overlapping meta-analyses can result in uncertainty when they come to discordant conclusions. Discordance can occur at the level of results or interpretation, and the underlying sources are summarised in Table 3 (refs 25,26). Effect sizes can differ because some meta-analyses use slightly different eligibility criteria for study selection, such as the eligibility of abstracts or language restrictions. Perhaps more subtle are discordances due to handling and interpretation of heterogeneity and publication bias.

Heterogeneity is an apparent difference between the results of the primary studies27,28, and may be present when study populations, interventions, outcomes, or methodologies differ across the studies. Heterogeneity is generally quantified by the I² statistic or Cochran’s Q statistic29. To evaluate heterogeneity, authors should not only examine the statistic, but also scrutinise potential sources of heterogeneity by comparing primary study characteristics, design, follow-up duration, patient characteristics and outcome definitions30. Meta-regression is a typical approach to relate sources of variation in heterogeneous treatment effects to specific study characteristics. However, study-level meta-analyses have some limitations in explaining heterogeneity, and using individual patient data in patient-level meta-analyses may lead to a less biased assessment31. In addition, patient-level meta-analyses allow better alignment of definitions and follow-up. This is illustrated by the above-mentioned meta-analysis by Piccolo et al., which pooled individual patient data from trials of intracoronary versus intravenous administration of abciximab, enabling investigation of detailed endpoints such as post-procedural Thrombolysis In Myocardial Infarction (TIMI) 3 flow, myocardial blush grade and complete ST-segment resolution12.
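For reference, the two heterogeneity statistics are commonly defined as follows, where $k$ is the number of primary studies, $y_i$ the effect estimate of study $i$ and $v_i$ its within-study variance (the notation is chosen here for illustration and is not taken from the cited references):

$$
w_i = \frac{1}{v_i}, \qquad
\hat{\theta} = \frac{\sum_{i=1}^{k} w_i\, y_i}{\sum_{i=1}^{k} w_i}, \qquad
Q = \sum_{i=1}^{k} w_i \left( y_i - \hat{\theta} \right)^2
$$

$$
I^2 = \max\!\left( 0,\; \frac{Q - (k - 1)}{Q} \right) \times 100\%
$$

Under the hypothesis of no heterogeneity, $Q$ approximately follows a chi-squared distribution with $k-1$ degrees of freedom. $I^2$ expresses the share of the total variation across studies that is attributable to heterogeneity rather than chance. As a hypothetical example, $Q = 10$ from $k = 5$ studies gives $I^2 = (10 - 4)/10 = 60\%$.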

Publication bias is the tendency of investigators, reviewers and editors to submit or accept manuscripts for publication based on the direction or strength of the study findings32. Tests that assess publication bias include funnel plots, Harbord-Egger tests, and trim and fill analyses33-35. If these tests identify missing studies with a smaller effect or an effect in the opposite direction, investigators should be very careful with their conclusions regarding the presence and/or direction of the association under study.
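A regression-based test for funnel plot asymmetry can be sketched as follows. This minimal Python example implements the classic Egger regression, not the Harbord modification for binary outcomes: the standardised effect (effect divided by its standard error) is regressed on precision (the reciprocal of the standard error), and an intercept that departs from zero suggests small-study effects. The function name and the returned tuple are hypothetical choices made for illustration.

```python
import math


def egger_regression(effects, std_errors):
    """Ordinary least squares of (effect / SE) on (1 / SE).

    Returns (intercept, intercept_se, t_statistic, degrees_of_freedom).
    The t statistic is compared with a t distribution on k - 2 degrees
    of freedom; that lookup is left to the reader.
    """
    k = len(effects)
    if k < 3:
        raise ValueError("at least three studies are needed")
    precision = [1.0 / se for se in std_errors]
    z = [y / se for y, se in zip(effects, std_errors)]

    mean_x = sum(precision) / k
    mean_z = sum(z) / k
    sxx = sum((x - mean_x) ** 2 for x in precision)
    sxz = sum((x - mean_x) * (zi - mean_z) for x, zi in zip(precision, z))

    slope = sxz / sxx
    intercept = mean_z - slope * mean_x

    # Residual variance and standard error of the intercept.
    rss = sum((zi - intercept - slope * x) ** 2 for x, zi in zip(precision, z))
    residual_var = rss / (k - 2)
    intercept_se = math.sqrt(residual_var * (1.0 / k + mean_x ** 2 / sxx))

    t_stat = intercept / intercept_se if intercept_se > 0 else float("nan")
    return intercept, intercept_se, t_stat, k - 2
```

With few primary studies, as in several of the meta-analyses discussed here, such tests have low power, so a non-significant intercept does not rule out publication bias.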

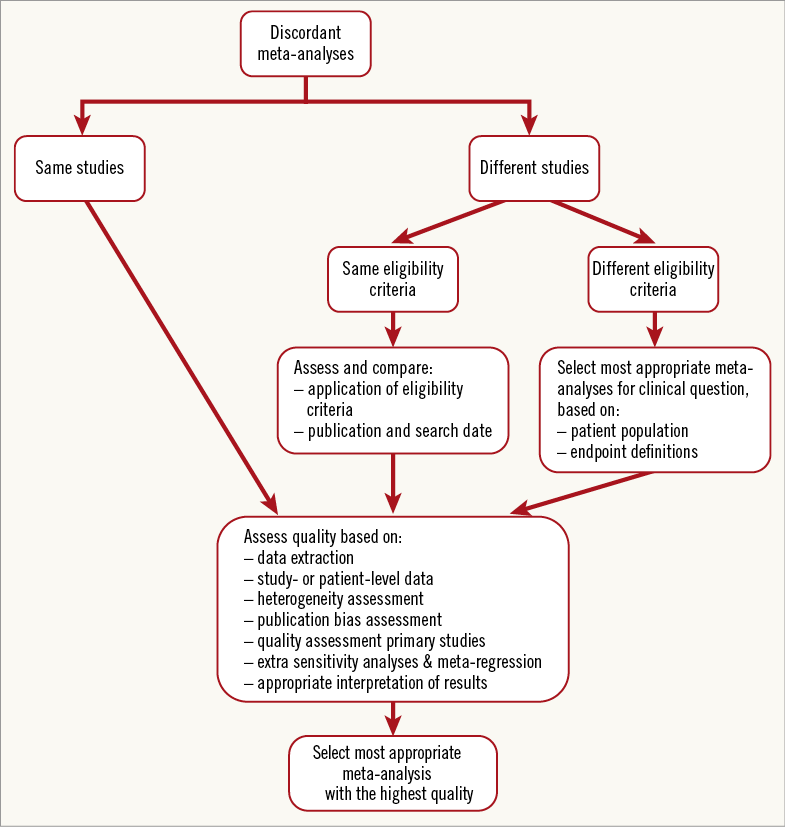

Discordant meta-analyses pose challenges for authors, clinicians and editorial boards. Which meta-analysis is most applicable to the clinical question, and which one is methodologically most solid? A flow chart to help with the interpretation of discordant meta-analyses is provided in Figure 2 (ref. 26). When meta-analyses truly study the same question, the flow chart guides the reader to methodological appraisal of the discordant meta-analyses. Quality scoring lists might be useful as well, such as the Oxman-Guyatt list and the AMSTAR checklist36,37. These checklists can be used to map the methodological quality of meta-analyses. AMSTAR includes questions on design (e.g., “was there duplicate study selection and data extraction?”; “was a comprehensive search performed?”), analysis (e.g., “was the scientific quality of the included studies documented?”; “were the methods used to combine the findings of the studies appropriate?”), and interpretation (e.g., “was the scientific quality of the included studies used appropriately in formulating conclusions?”). The use of scoring systems for assessing quality seems easy and attractive, and AMSTAR is a validated quality measurement tool. On the other hand, calculating these summary scores involves assigning weights to different items in the scale and thus prioritising studies based on arbitrary assumptions. Reporting in full how each meta-analysis was rated on each criterion is therefore preferable.

Figure 2. Flow chart for the interpretation of discordant, overlapping meta-analyses. The flow chart helps readers interpret overlapping, discordant meta-analyses by guiding them to a methodological appraisal. Adapted from Jadad et al26.

How to preserve the value of meta-analyses

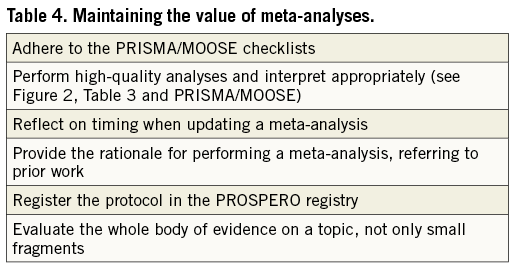

A list of considerations for maintaining the value of meta-analyses and for improving the quality of research in this field is provided in Table 4. Adherence to accepted guidelines for reporting is essential to preserve the quality and value of meta-analyses. The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses, formerly QUOROM) statement consists of a 27-item checklist and a four-phase flow diagram aimed at improving the consistency and completeness of reporting of meta-analyses of RCTs38. An analogous document has been developed by the MOOSE (Meta-Analysis Of Observational Studies in Epidemiology) group for meta-analyses of OSs39.

Because the evidence on a topic is typically dynamic and evolves over time, incorporation of new studies into an existing meta-analysis may lead to different conclusions40. Additional incentives for updating a meta-analysis may include the potential availability of new tools or markers to characterise subgroups41, the introduction of new outcome measures42, or even advances in the methodology for conducting a systematic review/meta-analysis24. However, the merits of publishing a new meta-analysis on the same topic need to be evaluated, since redundant overlapping meta-analyses reflect a waste of resources and potentially add confusion. Authors of possibly overlapping meta-analyses should report the rationale for performing the meta-analysis (e.g., outdated and/or low-quality previous meta-analyses). The PICO (Population, Intervention, Comparator, and Outcome) framework could be used to point out what aspect of the research question has changed. Optimal timing for a new meta-analysis depends on the speed of scientific progress in the specific field and the importance of the research question. Periodic literature surveillance, expert opinions and scanning of abstracts are helpful to identify new relevant evidence that may eventually be used for an updated meta-analysis. Once the need for updating a meta-analysis has been identified, the update should be performed properly and effectively. Technically, a previous search strategy can be useful, and specific statistical methods for updating a meta-analysis have been described, such as “cumulative meta-analysis” and “null meta-analyses ripe for updating” approaches43,44. Bayesian methodology for meta-analysis might provide a way to update and/or consolidate the evidence on a topic. In contrast to the frequentist approach, Bayesian statistics incorporate clinical judgement and pertinent information that would otherwise be excluded, and establish inferences using a wide range of flexible methods grounded in the theory of conditional probability24,45,46.
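The idea of a cumulative meta-analysis can be illustrated with a short Python sketch: studies are ordered by publication year and the pooled estimate is recomputed each time a study is added. The sketch assumes a simple inverse-variance fixed-effect model and a normal approximation for the 95% confidence interval; it is not necessarily the procedure described in the cited references, and the function name and input layout are hypothetical.

```python
import math


def cumulative_meta_analysis(studies):
    """Re-pool the evidence after each study, in chronological order.

    `studies` is an iterable of (year, effect, variance) tuples.
    Returns a list of (year, pooled_effect, ci_low, ci_high) tuples,
    one entry per added study.
    """
    sum_w = 0.0    # running sum of inverse-variance weights
    sum_wy = 0.0   # running sum of weight * effect
    steps = []
    for year, effect, variance in sorted(studies, key=lambda s: s[0]):
        weight = 1.0 / variance
        sum_w += weight
        sum_wy += weight * effect
        pooled = sum_wy / sum_w
        se = math.sqrt(1.0 / sum_w)
        steps.append((year, pooled, pooled - 1.96 * se, pooled + 1.96 * se))
    return steps
```

Reading the returned list from top to bottom shows whether the pooled effect and its confidence interval have stabilised. If they have, a further update is unlikely to change the conclusion.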

An important potential strategy to avoid multiplication of unnecessary meta-analyses is consultation of dedicated registries. For instance, the PROSPERO registry (http://www.crd.york.ac.uk/NIHR_PROSPERO) includes over 2,000 prospectively registered protocols of systematic reviews and meta-analyses in health and social care47-49. Registering meta-analyses in a central database, similar to registration of trials at www.clinicaltrials.gov, helps to avoid unplanned duplication, increases transparency in the review process, and enables assessment of the results of reported reviews versus what was initially planned by the authors in the protocol. Authors thereby increase the reputation of their work, while journal editors are provided with a safeguard against flawed methodologies.

Finally, meta-analyses should be comprehensive and not only evaluate small fragments of the evidence on a clinical question of interest50. To address this issue, umbrella reviews and network meta-analyses are gaining attention24,51. Umbrella reviews consider multiple treatment comparisons for the management of the same disease or condition, with each comparison considered separately and clustered meta-analyses performed as appropriate52,53. A treatment network typically uses a node for each available treatment, and each link between two nodes reflects a comparison of those treatments in at least one primary study. Compared with classic meta-analyses, umbrella reviews and network meta-analyses provide the reader with a broader view of the many treatments for a given condition, although typical limitations of standard meta-analyses (e.g., inherent bias of studies included, heterogeneity and publication bias) continue to apply.
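The node-and-link structure of a treatment network can be made concrete with a small Python sketch. It only builds the evidence graph and checks whether two treatments are linked through direct or indirect comparisons; it does not estimate any treatment effect. The function names and the treatment labels in the usage note are hypothetical.

```python
from collections import defaultdict, deque


def build_network(comparisons):
    """Count the primary studies behind each link.

    `comparisons` is an iterable of (treatment_a, treatment_b) pairs,
    one pair per primary study. Returns {frozenset({a, b}): n_studies}.
    """
    links = defaultdict(int)
    for a, b in comparisons:
        if a == b:
            raise ValueError("a study must compare two different treatments")
        links[frozenset((a, b))] += 1
    return dict(links)


def is_connected(links, start, goal):
    """True if `goal` can be reached from `start` via direct or indirect links."""
    neighbours = defaultdict(set)
    for pair in links:
        a, b = tuple(pair)
        neighbours[a].add(b)
        neighbours[b].add(a)
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over the treatment nodes
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in neighbours[node] - seen:
            seen.add(nxt)
            queue.append(nxt)
    return False
```

For example, two studies of A versus B and one study of B versus C give a link A-B supported by two studies and a link B-C supported by one. A and C have no direct link but are connected through B, which is the situation in which a network meta-analysis can offer an indirect comparison.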

Conclusions

The explosive dissemination of meta-analyses has entailed the publication of duplicate meta-analyses on the same topic. The purpose of a meta-analysis is to provide the reader with the most up-to-date evidence on the effect of an intervention and to increase the statistical power of treatment comparisons for a given condition beyond that of individual studies, with the ultimate goal of informing clinical practice and guiding healthcare decisions. To reflect the evolving knowledge on a topic, meta-analyses are regularly updated as new studies become available. However, redundancy of overlapping meta-analyses on the same topic is frequently obvious and reflects a waste of time, energy and economic resources. Considerations regarding heterogeneity, publication bias and quality of primary studies serve as a basis to appraise the evidence across overlapping meta-analyses. Raising the quality of research is a collective effort of authors, peer reviewers, editors and other players in the field. When preparing and submitting a meta-analysis, authors should take responsibility for advancing the field by adhering to the appropriate reporting guideline, reporting the rationale for performing the (updated) meta-analysis, registering their project in a dedicated database and evaluating the whole body of evidence. Similarly, peer reviewers and editorial boards should carefully evaluate the additional merits of the meta-analysis under review over previous work, thereby filtering out inappropriate meta-analyses, avoiding confusion and maintaining the value of meta-analyses.

Conflict of interest statement

The authors have no conflicts of interest to declare.

Online data supplement