“I like to think of the meta-analytic process as similar to being in a helicopter. On the ground individual trees are visible with high resolution. This resolution diminishes as the helicopter rises, and in its place we begin to see patterns not visible from the ground.” Ingram Olkin

As the metaphor by the meta-analysis pioneer Ingram Olkin suggests, systematic reviews are viewpoints on a given topic quoting primary authors or studies (i.e. reviews), which deliberately use and report a systematic approach to study search, selection, abstraction, and appraisal. Meta-analyses are studies (not necessarily reviews) which use specific statistical methods for pooling data from separate datasets. This issue of EuroIntervention includes two systematic reviews with meta-analytic pooling focusing, respectively, on the impact of cilostazol on restenosis and repeat revascularisation rates after coronary stenting,1 and on devices for prevention of distal embolisation in acute myocardial infarction.2 The diffusion of this type of research study, even in the journal of European interventionists, is further proof of the scientific validity, cost-effectiveness,3 clinical impact, and high citation rates of meta-analyses,4 despite several concerns about their recent proliferation.5

Is publication of any given study in a peer-reviewed journal such as EuroIntervention definitive evidence of its internal validity and usefulness for the clinical practitioner or researcher? Unfortunately not. Peer review is not very accomplished at judging or improving the quality of scientific manuscripts, and many examples of bad or unsuccessful peer-reviewing efforts can easily be found. However, much like democracy in the words of Sir Winston Churchill (“democracy is the worst form of government except all those other forms that have been tried from time to time”), peer review is the worst way to evaluate and appraise scientific research except all other methods that have been tried so far. This applies to all clinical research products in general and also, of course, to systematic reviews and meta-analyses. Thus, provided that meta-analyses are accurately and thoroughly reported, the burden of quality appraisal lies largely, as usual, in the eye of the beholder (i.e. the reader).

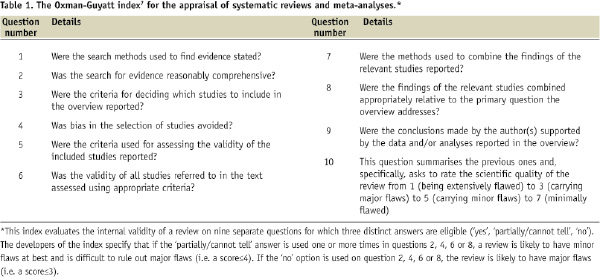

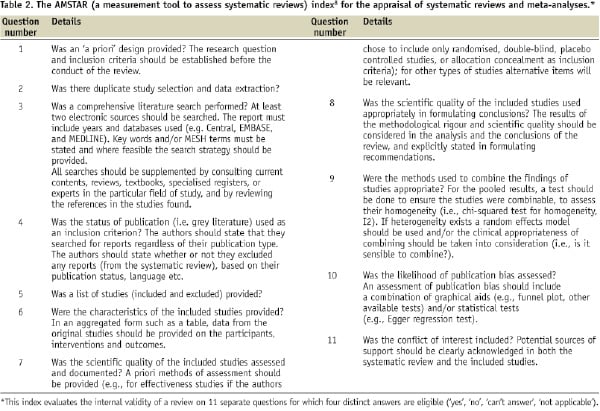

We provide here some simple suggestions for appraising the quality of systematic reviews and meta-analyses, using the two interesting systematic reviews published in this issue1,2 as examples (similarly to what has been done in the last few years by the “For Dummies” collection of paperback manuals).6 Those looking for more precise and structured appraisal tools can refer to the Oxman-Guyatt tool7 and the AMSTAR index,8 available in, respectively, Tables 1 and 2.

The two-step approach

Our simple two-step approach is not very original, being a simplification of the evidence-based medicine (EBM) approach for the evaluation of sources of clinical evidence, but it is nonetheless quite helpful.9 Indeed, reminding the reader of a brief definition of EBM is timely and appropriate: EBM is “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients”.9 It must also be stressed that “the practice of evidence-based medicine requires integration of individual clinical expertise and patient preferences with the best available external clinical evidence from systematic search”.9 Systematic reviews and meta-analyses, if well conducted and reported, reduce the effort we must spend looking for, appraising, and summarising the evidence. But the burden of deciding what to do with the evidence obtained for the care of our individual patient remains ours.

Thus, the first step in appraising a systematic review and meta-analysis is trying to find an answer to the question: can I trust it? In other words, is this review internally valid (i.e. does it provide a precise and largely unbiased answer to its scientific question)? Providing a definitive appraisal of the internal validity of a systematic review is not simple (see Tables 1 and 2), but it largely depends on the methods employed and reported regarding study search, selection, abstraction, appraisal and, if appropriate, pooling.

Even if we can conclude that a given meta-analysis is internally valid, we still have to face the second step in its appraisal. This second step mainly involves the external validity of the study. In other words, can I apply the review results to the case I am facing or will shortly face? It more basically means answering the question: so what? Decisions on external validity are highly subjective and may change depending on the clinical, historical, logistical, cultural or ethical context. Nonetheless, systematic reviews and meta-analyses can improve our appraisal of the external validity of any given clinical intervention, by suggesting its overall clinical efficacy (or lack of it).

In the following sections we will focus more practically on the systematic reviews published in this issue of EuroIntervention,1,2 and provide the results of our two-step approach in their appraisal.

The first step: can I trust it?

Can I trust the review by Tamhane et al1 on the beneficial anti-restenotic effect of cilostazol after coronary stenting? I would conclude positively. They searched several databases and conference proceedings, study selection was performed by two reviewers, and data abstraction was followed by a thorough study quality appraisal. Endpoints were clearly spelled out and appropriately pooled with both random-effects and fixed-effect models, with the addition of several tests for small study bias. Despite the reliance on angiographic follow-up, which may inflate clinical restenosis rates and lead to more non-ischaemia driven revascularisations, their stance that cilostazol reduces restenosis and repeat revascularisations after coronary stenting is trustworthy.
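For readers unfamiliar with what pooling with fixed-effect and random-effects models involves, the following is a minimal sketch in Python. It assumes inverse-variance weighting for the fixed-effect model and the DerSimonian-Laird estimator of between-study variance for the random-effects model; these are common choices, not necessarily the ones used in the reviews discussed here. The function names and the example log risk ratios are hypothetical and serve only as an illustration.

```python
import math


def pool_fixed(effects, variances):
    """Inverse-variance fixed-effect pooling: returns (estimate, standard error)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * y for w, y in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)


def pool_random_dl(effects, variances):
    """DerSimonian-Laird random-effects pooling: returns (estimate, SE, tau^2)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fixed, _ = pool_fixed(effects, variances)
    # Cochran's Q measures the dispersion of study effects around the fixed estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = total - sum(w * w for w in weights) / total
    # Between-study variance, truncated at zero.
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    re_weights = [1.0 / (v + tau2) for v in variances]
    re_total = sum(re_weights)
    estimate = sum(w * y for w, y in zip(re_weights, effects)) / re_total
    return estimate, math.sqrt(1.0 / re_total), tau2


if __name__ == "__main__":
    # Hypothetical log risk ratios and their variances (not data from any trial).
    log_rr = [-0.60, -0.35, -0.10, -0.80]
    var = [0.04, 0.09, 0.02, 0.16]
    fe, fe_se = pool_fixed(log_rr, var)
    re, re_se, tau2 = pool_random_dl(log_rr, var)
    print(f"fixed-effect RR:   {math.exp(fe):.2f}")
    print(f"random-effects RR: {math.exp(re):.2f} (tau^2 = {tau2:.3f})")
```

When the studies agree closely, the estimated between-study variance is zero and the two models coincide; when they disagree, the random-effects model gives relatively more weight to small studies and yields a wider confidence interval, which is why reporting both is informative.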

Can I also trust the meta-analysis by Inaba et al2 on devices for distal embolisation in acute myocardial infarction, showing that most beneficial findings in favour of these devices stem from single-centre studies? Even a quick look at their paper clearly suggests a positive answer. They performed a comprehensive literature search without language restrictions, with two reviewers independently selecting only high-quality studies, abstracting the data and scoring the internal validity of the included randomised trials. Finally, statistical pooling was performed with established methods complemented by meta-regression analyses exploring the impact of major covariates. Their main focus was on looking for explanations of the apparent differences between the individual studies addressing this clinical research question. They compared the role of several covariates on the results of devices for distal protection, finding that single-centre studies were more likely to report beneficial results for these devices than multicentre studies. Thus, despite the post hoc nature of most of their comparisons and the risk of ecological fallacy (whereby inferences about the nature of specific individuals are based solely upon aggregate statistics collected for the group to which those individuals belong), their conclusions are trustworthy.
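To make the idea of meta-regression on a study-level covariate more concrete, here is a minimal sketch in Python. It assumes a fixed-effect meta-regression, i.e. weighted least squares with inverse-variance weights and a single binary covariate coded 1 for single-centre and 0 for multicentre studies. The function name and the numbers are hypothetical, and this is not necessarily the model fitted by Inaba et al.

```python
import math


def meta_regression(effects, variances, covariate):
    """Weighted least squares of study effects on one study-level covariate.

    Weights are inverse variances. Returns (intercept, slope, slope SE).
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, covariate)) / total
    y_bar = sum(w * y for w, y in zip(weights, effects)) / total
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, covariate))
    if sxx == 0:
        raise ValueError("covariate does not vary across studies")
    sxy = sum(
        w * (x - x_bar) * (y - y_bar)
        for w, x, y in zip(weights, covariate, effects)
    )
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope, math.sqrt(1.0 / sxx)


if __name__ == "__main__":
    # Hypothetical log odds ratios; covariate: 1 = single-centre, 0 = multicentre.
    log_or = [-0.50, -0.50, -0.10, -0.10]
    var = [0.04, 0.04, 0.04, 0.04]
    single_centre = [1, 1, 0, 0]
    intercept, slope, se = meta_regression(log_or, var, single_centre)
    print(f"multicentre pooled log OR: {intercept:.2f}")
    print(f"single-centre difference:  {slope:.2f} (SE {se:.2f})")
```

With a binary covariate the slope is simply the difference between the pooled estimates of the two subgroups. Because the covariate describes whole studies rather than individual patients, the ecological fallacy caveat mentioned above applies to any inference drawn from such a model.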

The second step: so what?

So what? In other words, what is the external validity of the review by Tamhane et al?1 This question is more difficult to answer, and depends on several factors. First, cilostazol is not available in Italy, nor in other European countries. Second, the cost and duration of any cilostazol treatment should be factored in and formal cost-effectiveness studies should be completed. Third, I would currently consider cilostazol only in patients at very high risk of restenosis (e.g. diabetics or those with chronic renal failure), whereas wider use could be foreseen if the antiplatelet effects of cilostazol are confirmed, so that it could be used to further reduce the risk of stent thrombosis in those more prone to this adverse event.

After reading the article by Inaba et al,2 what should I practically do? In other words, what is the external validity of their work? Should I use devices for distal protection only when I am participating in a single-centre trial, because otherwise they are unlikely to be beneficial? Of course, this simplistic attitude is almost never correct. More humbly, I could conclude that single-centre studies may provide more significant results because expertise and experience with a given device are much greater, but that this comes at the expense of a higher risk of bias (e.g. lack of allocation concealment or appropriate blinding of assessors) in comparison to multicentre trials.

Take home messages

It is clear that appraising the internal validity, and even more the external validity, of any research endeavour, including the meta-analyses by Tamhane et al1 and by Inaba et al,2 is highly subjective, and thus we leave ample room for readers to enjoy them and appraise them on their own. The only issue worth stressing further is that only collective, constructive, but critical post-publication appraisal of scientific studies can place and keep them in the appropriate context for their correct and practical use by the clinical researcher and the clinical practitioner.

Acknowledgements

This work is part of a senior investigator research program of the Meta-analysis and Evidence-based medicine Training in Cardiology (METCARDIO) Center, based in Turin, Italy (http://www.metcardio.org).