Using simulation to train healthcare professionals is not new. Resuscitation training with manikins enables us to practise, make mistakes and receive feedback on our performance in a way which is simply not possible in real-life clinical scenarios. We practise simulated patient encounters with actors or manikins, and use procedural models when practising skills such as vascular access or intercostal drainage. Emergency drills are also run using simulators in clinical areas to train staff and to identify problems that might arise when the real situation is encountered. Common to these scenarios are both replication of clinical situations for training or assessment, and supervision from an educator.

There has been interest in extending simulation training to the performance of complex procedural skills. The theoretical advantages of perfecting technique and reducing the risk of complications without exposing patients to harm are attractive, but the evidence to support these assumptions has been slow to follow because of difficulties in designing and conducting robust studies to establish whether hard clinical endpoints can be attributed to specific training methods. There are many studies focusing on surrogate outcomes such as reaction and performance in simulation; however, recent studies have shown improvement in patient outcomes. One large study examined the effect of simulation training upon central venous catheter insertion and found a significant reduction in the number of infections, with cost savings for hospitals [1,2]. There have also been studies examining more complex surgical and endovascular procedures such as laparoscopic surgery and carotid artery stenting. One novel study even demonstrated that performing the first procedure of a list in simulation as a “warm-up” led to a reduction in errors [3].

The present study by Jensen et al. contains small numbers but is an elegant demonstration of the potential that simulation training holds when adequately resourced and supported. Eight trainees who underwent an intensive simulation training programme in cardiac catheterisation demonstrated shorter fluoroscopy screening and total procedure times, and better expert-assessed performance and error scores, than a similar group which continued with conventional training. All participants were within the second half of a five-year cardiology training programme. Only two in each group had prior experience of cardiac catheterisation and none had previously performed the procedure themselves. The simulation group underwent a comprehensive programme of training, including expert-supervised sessions, unsupervised practice and proficiency assessment, ensuring that all had achieved a required level of competency in simulation before being allowed to proceed to the assessment on live patients. What is not specified is the nature of the training, if any, that the control group received [4]. One would expect that a group receiving intensive skill training in whatever form would outperform a control group receiving no training, but an important question is what the simulator contributed in this case, and whether expert mentorship alone would have provided similar improvements in performance. There is evidence that following a “mastery” learning model, as in this study, is superior to a fixed curriculum unresponsive to the learner.

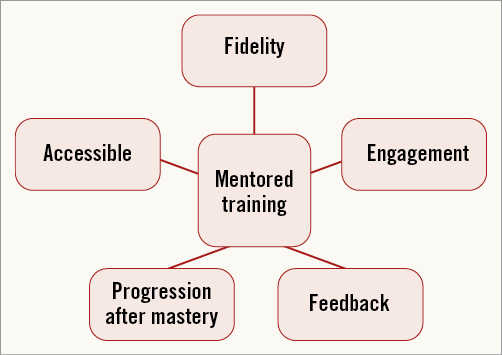

In a systematic review and meta-analysis, Issenberg et al. identified factors which led to effective learning in simulation. These features are important to consider when implementing a simulation programme, which may prove ineffective or even detrimental if not appropriately conceived [5]. Figure 1 illustrates some important features to consider.

Figure 1. Important features to consider in implementing simulation.

Simulation may accelerate progress for novice operators at the start of the learning curve, where complications are most commonly seen [6,7]. Training using real patient lists requires the procedure to be completed in a linear fashion: each patient has attended for a complete procedure, including angiography suite preparation between patients and reporting. Whilst it may be possible for the mentee to focus on specific aspects of the procedure for each case, simulation allows single elements of a procedure to be repeated continuously without a break until competence has been achieved, after which the next stage can be practised in a similar fashion until all of the required steps have been mastered. The flexibility afforded by this approach allows the mentor and trainee to focus on specific areas of weakness whilst progressing rapidly through areas which are already mastered.

The contribution of fidelity should also be addressed. This is the realism of the experience, incorporating the external appearance of devices, the angiographic image and tactile aspects. Fidelity is an important contributor to the response of the learner to the simulation and hence to the degree to which they are able to immerse themselves as if they were performing on a live patient. It should not be assumed, however, that a simulator which recreates the procedure with high fidelity will, on its own, lead to expert acquisition of the skill in question. The current generation of angiographic simulators is a remarkable feat of technology but, as anyone who has used them will confirm, there is still a gap between the experience of using them and real-life procedures, which requires a degree of suspension of disbelief. Undoubtedly, as the technology improves, this gap will narrow, but it remains one of the most commonly encountered objections from those who do not wish to adopt simulation into their own practice.

The importance of feedback cannot be overstated. Learners left to practise and find their own way do no better than those who do not undergo simulation training, and may acquire potentially harmful behaviours. There are those who will not be motivated to engage with the learning process and who, without support from mentors, will probably view simulation as a waste of time and resources which could be spent on gaining clinical experience. There is also the need for the experienced operator to set the curriculum for learning, advancing the difficulty of cases for the learner who is progressing well, or placing increased emphasis on areas of difficulty for others. Continuing to repeat areas already mastered is again not a good use of time or resources.

Deploying simulation therefore has the potential to be hugely beneficial in accelerating the learning curve for trainees, and in particular in reducing patient risk when they are at their most inexperienced; however, it is a resource-intensive exercise. Programmes such as that employed in the Jensen study require considerable investment and backing from training institutions to ensure that the level of training is sustainable; this applies both to equipment purchase and maintenance and to faculty time. In our training region, we invite all those commencing training in cardiology to a two-day programme where they receive individually mentored training on basic catheter angiography, pacing and vascular access – an event which requires the equivalent of 20 consultant-days to run. This has proven to be well received by the trainees, with feedback suggesting that they are more confident in procedural performance afterwards. However, continuing this level of support when they return to individual hospitals to gain hands-on practice is more challenging given the reality of busy schedules and limited simulator availability. In an ideal model of practice, each trainee and their mentor would have ready access to a simulator in a location convenient to clinical working areas such as the catheter suite, and regular scheduled time to make use of it, following a curriculum which adapts to individual learning needs. As the evidence for the impact of simulation on patient safety mounts and the technology improves, one would expect this model to become standard practice.

Conflict of interest statement

The authors have no conflicts of interest to declare.