- impact factor

- scientific assessment

- Hirsch-factor

- Thomson Reuters

Abstract

The most widely used scientific evaluation parameters today are: 1) the impact factor (IF) of the scientific journals in which researchers and their collaborators publish their papers, and 2) the so-called H-factor, which is used to evaluate the work of individual scientists. We explore these two parameters in detail. We also briefly discuss alternative forms of assessment in the modern age.

Impact factor of scientific journals

The impact factor (IF) is a measure that reflects the average number of citations to articles published in science journals. It was created in 1955 by Eugene Garfield1, the founder of the Institute for Scientific Information (ISI), located in Philadelphia in the United States. ISI now forms a major part of the science division of the Thomson Reuters company. Impact factors are calculated yearly for those journals that are indexed in Thomson Reuters’ Journal Citation Reports (JCR). The IF of a journal for a given year is the average number of citations received in that year per paper published in the journal during the two preceding years. The formula is the following:

2010 impact factor = A/B

where A = the number of times articles published in 2008 and 2009 were cited by indexed journals during 2010, and B = the total number of “citable items” published by that journal in 2008 and 2009. (Citable items are usually original articles, expert reviews, proceedings or notes; editorials and letters to the editor are often non-citable items.)
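The calculation can be sketched in a few lines of Python. This is only an illustration of the A/B formula: the function name `impact_factor` and the journal figures (300 citations, 120 citable items) are hypothetical and do not come from the JCR.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Return A / B for one journal and one year.

    citations     -- A: citations received this year to items published
                     in the two preceding years
    citable_items -- B: citable items published in those two years
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical journal: 120 citable items published in 2008 and 2009,
# cited 300 times by indexed journals during 2010.
if_2010 = impact_factor(citations=300, citable_items=120)
print(f"2010 impact factor = {if_2010:.2f}")  # prints "2010 impact factor = 2.50"
```

Because B counts only citable items while A counts all citations, the result is sensitive to how items are classified.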

As can be determined from this formula, a journal can only receive an impact factor after being indexed in the database for three consecutive years: two publication years plus the year in which the citations are counted. This database is the so-called Web-of-Science, which is maintained by Thomson Reuters. To gain entry to the database, the journal is evaluated by a select committee. Many factors, ranging from the qualitative to the quantitative, are taken into account when evaluating the journal for entry. The journal’s basic publishing standards, its editorial content, the international diversity of its authorship, and the citation data associated with it are all considered.2

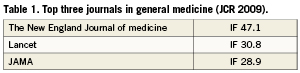

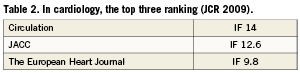

The impact factor on its own, then, is perhaps a meaningless number, but it gains obvious merit when a journal is compared with other journals in the same research field. An indication of journal IFs, based on the JCR published in 2009, can be found in Table 1 and Table 2. It is noteworthy that half of all indexed journals in the Web-of-Science database have an impact factor equal to or lower than 1.

The H-factor of an individual scientist

Another increasingly used scientific parameter is the so-called H-factor (or Hirsch-factor, Hirsch-index or Hirsch-number). This parameter is used today in many institutes to determine whether an individual scientist is ready to climb the next step of the scientific ladder. The H-factor is an index that attempts to measure both the productivity and the impact of the published work of a scientist or scholar. The index is based on the set of the scientist’s most cited papers and the number of citations that they have received in other people’s publications. It could also be applied to evaluate the productivity of a group of scientists, a department or even a complete university.

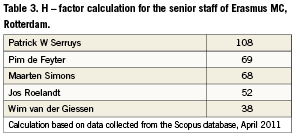

To calculate the H-factor, Hirsch writes: “A scientist has index h if h of (his/her) Np papers have at least h citations each, and the other (Np – h) have at most h citations each.” While this might look complicated, it is actually quite simple: for example, if one has 10 papers, ranked from most to least cited, of which the 3rd paper is cited three times and the 4th and subsequent papers fewer than three times, the H-factor of this individual will be 3. Hirsch suggested that for physicists a value of about 18 could mean a full professorship. For cardiology, it has recently been suggested that a professor in clinical cardiology has an H-factor of 20 and that most department chiefs have an H-factor of around 40 (this is in the Netherlands; it might be different in other countries). As an indication, we have calculated the H-factor for the senior staff of Erasmus MC, Rotterdam (Table 3).
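Hirsch’s definition translates directly into a short procedure: rank the papers by citation count and find the last rank at which the count is still at least as large as the rank. The following Python sketch illustrates this; the function name `h_index` and the citation counts are hypothetical and are not taken from Table 3.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank   # the top `rank` papers all have >= rank citations
        else:
            break      # counts only decrease from here on
    return h


# Hypothetical scientist with seven papers.
papers = [25, 8, 5, 3, 3, 1, 0]
print(h_index(papers))  # prints 3: three papers have at least 3 citations each
```

Note that the single paper with 25 citations contributes no more to the index than a paper with 3 citations would, which is one reason the H-factor rewards sustained output rather than one highly cited paper.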

It is not necessary to calculate one’s own H-factor by hand. Citation databases such as Scopus, Web of Science and Google Scholar provide automatic tools. They will, however, give slightly different H-factors, as these databases differ in their coverage. It has been suggested that the smaller databases may be more accurate, but others have suggested using the highest H-factor, as the other databases might have a large number of false negatives.

Like the IF, the H-factor receives a lot of criticism. For example, it disregards the author’s placement in the authorship list; in other words, you could have a high H-factor without having published a single paper as first author. Furthermore, it is possible to increase your own H-factor through a large number of self-citations. It is also obvious that a scientist’s H-factor has a better chance of increasing if his/her work is published in a journal with a relatively high IF, as this improves the chances of being cited.

The H-factor is cumulative, so it will show higher values for those who have worked longer in the field and have a longer scientific publication track record. This makes it potentially difficult to compare the “real” impact on the area of research of individuals with different working histories. One way to correct for this is the so-called M-factor, which was also introduced by Hirsch. The M-factor is calculated by dividing the H-factor by the “scientific age” of the individual, defined as the number of years since the scientist first appeared on an author list. The M-factor can be thought of as the speed with which a researcher’s H-index increases.
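As a minimal sketch of this correction, the Python snippet below divides the H-factor by the scientific age. The function name `m_factor`, its parameters and the two example researchers are hypothetical; the example only shows how two individuals with different career lengths can be placed on a common scale.

```python
def m_factor(h: int, first_publication_year: int, current_year: int) -> float:
    """Return the H-factor divided by the scientific age in years."""
    scientific_age = current_year - first_publication_year
    if scientific_age <= 0:
        raise ValueError("scientific age must be at least one year")
    return h / scientific_age


# Hypothetical senior researcher: H-factor 20, first author listing in 1991.
senior = m_factor(h=20, first_publication_year=1991, current_year=2011)
# Hypothetical junior researcher: H-factor 12, first author listing in 2003.
junior = m_factor(h=12, first_publication_year=2003, current_year=2011)

print(f"senior: {senior:.2f}, junior: {junior:.2f}")  # prints "senior: 1.00, junior: 1.50"
```

In this made-up case the junior researcher has the lower H-factor but the higher M-factor, which is exactly the situation the correction is meant to reveal.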

The widespread use of these two scientific evaluation parameters influences our decisions about where to submit our scientific work. However, care must be taken not to treat these figures as the one and only truth about an individual. Much work within medicine other than original research is of high interest, as the number of downloaded papers in those other areas shows. Unfortunately, this may escape the attention of decision makers, as it is not reflected in easily understood and interpreted scientific evaluation parameters.

Impact factor considerations

Many scientists use the impact factor list to decide where to submit their work first. In daily practice this will most probably be the journal with the highest IF. Within the field of interventional cardiology, and depending on the senior author’s view of the importance of the scientific message, a typical route could be to submit first to a general medical journal, or to a general cardiology journal, or to a sub-specialty journal, for instance EuroIntervention.

There are multiple criticisms regarding the use of the IF, the editorial policies used or misused to influence it and, finally, its potentially incorrect application.

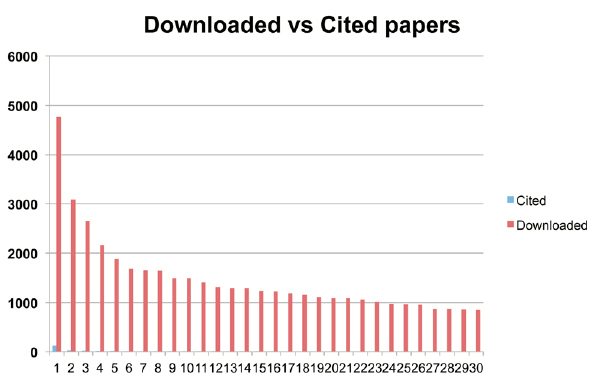

Some research institutes use the IF in the process of promotion on the academic ladder. The IFs of the journals in which candidates have published are used to determine the importance of their research. Other research institutes propose to distribute research funds within their institution based, amongst other things, on the number of publications of a given department in the top 25% of journals as ranked by IF in their particular research field (e.g., cardiology, urology, radiology, etc.). For cardiology this would imply that a paper should be published in a journal that has an IF of >3.5 (there are 95 indexed cardiology journals, and number 24, the International Journal of Cardiology, has an IF of 3.5). It is of interest that European agencies or national bodies can take into consideration citation counts categorised by institution when awarding grants or funding (Table 4).

Some journals have been known to manipulate the IF through self-citation. Roger Brumback described the famous case of Schutte and Svec, who cited all 66 articles published in Folia Phoniatrica et Logopaedica in the years 2005 and 2006 in an editorial published in the same journal in 2007; the IF jumped from 0.655 in 2006 to 1.439 the following year3. A year later, Thomson Reuters responded that journals will be removed from the JCR when excessive self-citation exists. As recently as 2009, 26 journals were suppressed from the JCR for excessive self-citation, some over 90%. Ironically, Thomson Reuters does not provide any guidance on an acceptable level of self-citation, although a figure of 20% self-citation has been recorded for the majority of journals in the JCR4.

Other noteworthy influences on the IF calculation include the provision of free electronic access to a journal, also known as open access, which creates a potentially greater availability and tends to raise the IF. Misprints in references could reduce the citation count by as much as 25%, as Seglen reported5. Ironically, poor papers may actually increase the IF by being cited as examples of poor research, and naturally a paper with a controversial topic or message would do the same.

For Nature, considered to be the top scientific journal, 90% of the 2004 IF was based on only 25% of its publications. The importance of any one publication will therefore differ from, and in most cases be lower than, the overall number. So the IF is not always a reliable instrument and should be handled with care.

In November 2007, the European Association of Science Editors (EASE) issued an official statement recommending “that journal impact factors are used only – and cautiously – for measuring and comparing the influence of entire journals, but not for the assessment of single papers, and certainly not for the assessment of researchers or research programs.”

Alternatives in the modern age

The IF, as discussed earlier, was created in 1955, and the essence of this measure has hardly changed since. The manner in which scientists read journals, however, has. Website traffic passing through the various scientific outlets has grown exponentially, and scholarly publications are now more often consumed online than in print. The number of downloads of a publication could gauge its true impact in a real-world environment, and since downloading occurs in real time, this measure is also immediate; via ISI one would have to wait two years to learn a paper’s impact. This concept of “usage data” is gradually finding its way into the field of scientific impact, as seen in the work of Bollen et al6. Recently the arrival of alt-metrics7 and this group’s call for new online scientific tools have supported the growing interest in alternative forms of scientific appraisal. Priem et al highlighted the growth of scientific blogs and reported finding citations on Twitter, claiming that at least a third of scholars are on Twitter8. It is noteworthy that while the Internet needed four years to reach 50 million users, Facebook reached 100 million users within nine months. Of Generation Y (people born after 1981), 96% use social media. Twitter claims that 175 million people send or resend tweets at a rate of about 1,000 per second, currently between 80 and 90 million per day.

IF and EuroIntervention

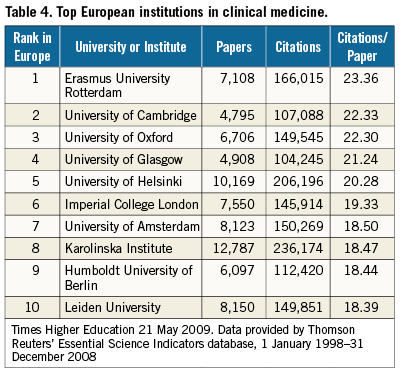

How does all this relate to EuroIntervention? While we are awaiting an official IF, it is perhaps interesting to study the top cited papers published in EuroIntervention since 2008, the year that the journal started being indexed in PubMed (Figure 1).

Figure 1. The number of times a EuroIntervention paper was cited in another scientific journal in the period 2008-2010. The X axis represents the top 30 cited papers; as an indication, the first three papers are paper 1: Piazza N et al9, paper 2: Vahanian A et al10, paper 3: Sianos G et al11.

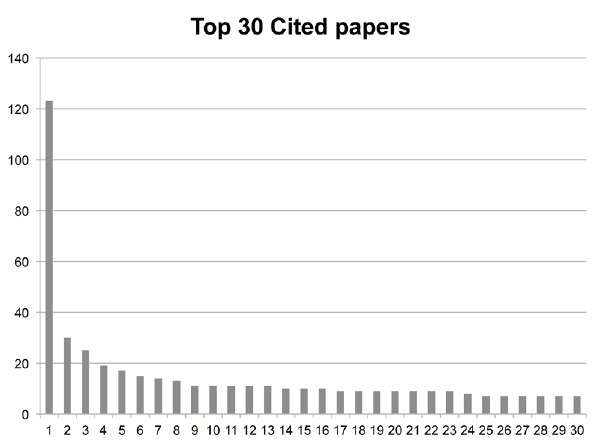

EuroIntervention is a scientific journal indexed in PubMed and also in the Web-of-Science, in the expectation of receiving an IF in the future. What we tend to forget is that it is also a practical journal that publishes “Hands-on” and “How-To” papers. These formats, for instance technical reports and the “How Should I Treat?” papers, are an interesting introduction to new techniques within the interventional community; however, as these papers do not describe an evaluation of a new therapy, they are much less likely to be cited. Publishing these types of papers will therefore affect the future IF of EuroIntervention, and with it the scientific standing of the journal as well as that of the academic groups submitting to it, as described above. We could perhaps make a distinction between scientific and clinical papers. But how can the clinical papers be evaluated on their merits? One possibility is to examine the number of papers downloaded from the EuroIntervention website (Figure 2).

Figure 2. The number of times a paper has been downloaded from the EuroIntervention website. The red columns are the all-time downloads, while the blue columns present the downloads for the same period as the citation counts (2008-2010).

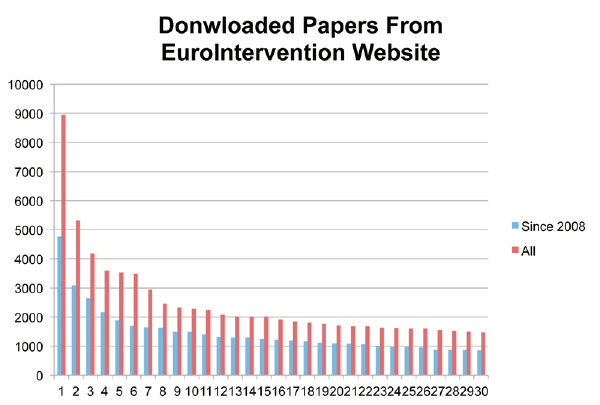

How these numbers relate to each other, i.e., downloads vs. citations, is better illustrated by presenting them in a single graph (Figure 3).

Figure 3. As can be appreciated, the number of downloads exceeds the number of citations by, on average, almost 100-fold.

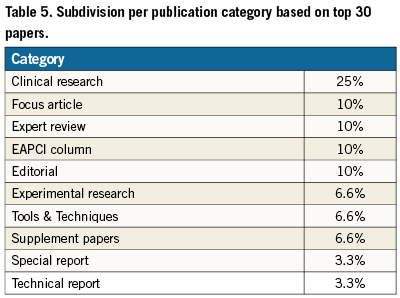

It is also of interest to look at how the top 30 downloads of all time are divided across publication categories (Table 5). As this table nicely shows, although there is a large interest in the clinical research papers (25%), the other categories are also well downloaded, indicating the wide interest of the EuroIntervention readership in both new science and “hands-on” material. It also shows that the IF has its limitations in measuring the real impact and extent of one’s science.

Within the field of cardiology journals, EuroIntervention will always have the natural but distinct disadvantage of being positioned as a subspecialty journal, with a smaller number of investigators from which submissions are drawn. This implies it would be folly to expect an IF in the neighbourhood of those of the major general cardiology journals. Naturally, the growth of the citation rate per paper needs “fermentation” and will possibly be slow in the initial period. Obviously, editors also hope to find that one visionary paper that may be looked down upon at publication but whose true importance, due to the dynamics of time, is recognised much later.

Then there is the paradox of the IF: the larger the number of papers published, the lower the impact factor. With the IF subconsciously in mind, acceptance rates are ever decreasing; for EuroIntervention the rate is currently 33%, with submissions up 12% compared to 2010. Having started in 2005 with four publications per year and slowly increased each year, the Editors have now reached a publication plateau for the coming years, namely monthly publication since January 2011, which is advantageous for the IF. This denominator in the IF calculation will remain stable for the coming years so as to avoid the nightmare scenario seen with other young journals entering the Web of Science, i.e., starting with a respectable IF, increasing the publication schedule on the back of this first success, and then seeing the second IF decrease.
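The effect of the denominator can be illustrated with a small numerical sketch. All figures below are hypothetical and chosen only to show the mechanism: when a young journal doubles its output, the number of citable items grows at once, whereas citations to the newer papers need time to accumulate.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Citations in the census year divided by citable items of the two prior years."""
    return citations / citable_items


# Hypothetical first IF: 40 citable items in each of the two preceding years,
# 160 citations to them in the census year.
first_if = impact_factor(citations=160, citable_items=40 + 40)

# Hypothetical second IF: output doubled to 80 items in the most recent year,
# but citations rise only to 200 because the new papers are still young.
second_if = impact_factor(citations=200, citable_items=40 + 80)

print(f"first IF = {first_if:.2f}, second IF = {second_if:.2f}")
# prints "first IF = 2.00, second IF = 1.67"
```

In this made-up case total citations rise by a quarter, yet the IF falls, which is the scenario a stable publication schedule is intended to avoid.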

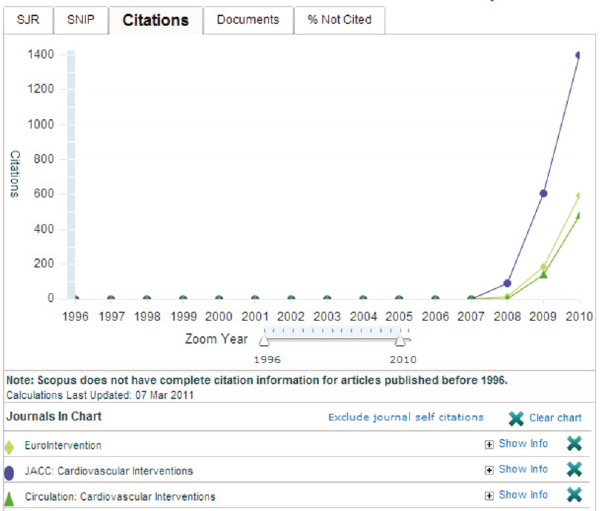

Finally, while we wait for the IF, Figure 4, based on data collected from Scopus on March 7, 2011, at least gives us an indication of where EuroIntervention stands amongst its peers; note that the other two journals are also awaiting an IF.

Figure 4. The position of the new interventional journals awaiting the IF based on data collected in Scopus.

Conflict of interest statement

The authors have no conflict of interest to declare.